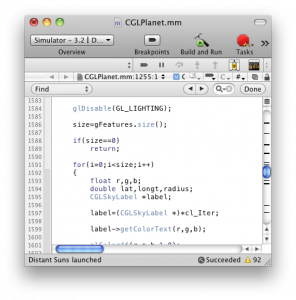

A guest post by Mike Smithwick, author of Distant Suns 2 for the iPhone/iPad and blogger at distantsuns.com. Mike is a seasoned iPhone/iPad developer who has developed numerous iPhone applications. He has recently released Distant Suns for iPad. Follow Mike’s work on Twitter for more information.

Credit: Distant Suns

Another in a very occasional series of columns covering the craft of programming and what it takes to create one of those app things.

Even though this article is about computer languages, it is not meant to teach anyone how to speak any of them; that is light-years beyond its scope.

A computer program is often likened to a recipe: one with potentially millions of steps that could come crashing down in a smoldering heap of code if even one of those steps is in the wrong order. The recipe might tell the system that if the user does something, then load in an image, draw it to the screen in green, rotate it three times, do the hokey-pokey and fade it out. And that might be just one little task of thousands in a complex web of tasks, actions and behaviors.
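To make the recipe idea a little more concrete, here is a minimal sketch in Python (chosen only for readability; it is not what iPhone apps are written in). Every name in it, from `load_image` to the `star.png` file name, is a made-up stand-in for real drawing code. It shows only that the steps run in a fixed order, and that running them out of order fails.

```python
# A toy "recipe": each step is a function, and the steps run in order.
# All step names and the file name are hypothetical placeholders.

def load_image(state):
    state["image"] = "star.png"
    state["log"].append("load image")

def draw_in_green(state):
    # Drawing depends on an earlier step; run too early, it fails.
    if "image" not in state:
        raise RuntimeError("nothing to draw: image not loaded yet")
    state["log"].append("draw in green")

def rotate(state):
    state["log"].append("rotate")

def do_hokey_pokey(state):
    state["log"].append("do the hokey-pokey")

def fade_out(state):
    state["log"].append("fade out")

def run_recipe(steps):
    """Run each step in sequence and return the list of what happened."""
    state = {"log": []}
    for step in steps:
        step(state)
    return state["log"]

# What happens when the user "does something".
RECIPE = [load_image, draw_in_green, rotate, rotate, rotate,
          do_hokey_pokey, fade_out]

if __name__ == "__main__":
    for line in run_recipe(RECIPE):
        print(line)
```

Swapping the first two steps, as in `run_recipe([draw_in_green, load_image])`, raises an error because the drawing step runs before there is anything to draw: a small version of the smoldering heap.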

As with any kitchen recipe, there is a specific lingo involved: a precise form of shorthand that both the iPhone and the programmer can understand. And it is this shorthand that forms the basis of a computer language.

The earliest computers were programmed at the lowest level, in bits and bytes, frequently hand-entered by switches on the front of the machine, paper tape or even punch cards. It was a system that was extremely tedious, highly error-prone and very hard to read. Back to the kitchen analogy: think of the instruction to take a cup of flour and mix it with one egg. Short and to the point. But the earliest machines didn't know what flour, a cup or an egg might be. So the recipe would now have to actually instruct how to make a measuring cup, how to grow and harvest wheat, then grind it up into flour, and well, you get the point. There had to be a better way, and as a result, FORTRAN (FORmula TRANslator) was developed at IBM, beginning in 1954. Widely considered the first modern computer language, it used a combination of basic mathematical symbols, punctuation and simple English words to describe program flow. Instead of having to describe how to raise wheat, the system would now already understand what wheat really was. Very quickly other languages were developed, such as COBOL, LISP and ALGOL, and many others that are still in use today.
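The gap between the two styles can be sketched with a toy example, again in Python. The high-level version states a formula in one line, much as FORTRAN allowed. The low-level version spells out the same calculation one tiny step at a time for an imaginary machine. The `LOAD`, `MUL` and `STORE` instructions and the registers `A` and `B` are invented for illustration and do not correspond to any real computer.

```python
PI = 3.14159

def area_high_level(r):
    # One line of ordinary math symbols: the "cup of flour" version.
    return r * r * PI

def area_low_level(r):
    # The same calculation for an imaginary machine that can only
    # copy numbers into registers and multiply two registers.
    memory = {"r": r, "pi": PI}
    registers = {"A": 0.0, "B": 0.0}
    program = [
        ("LOAD", "A", "r"),      # A = r
        ("LOAD", "B", "r"),      # B = r
        ("MUL", "A", "B"),       # A = A * B  (r squared)
        ("LOAD", "B", "pi"),     # B = pi
        ("MUL", "A", "B"),       # A = A * B  (r squared times pi)
        ("STORE", "A", "area"),  # write the result back to memory
    ]
    for op, x, y in program:
        if op == "LOAD":
            registers[x] = memory[y]
        elif op == "MUL":
            registers[x] = registers[x] * registers[y]
        elif op == "STORE":
            memory[y] = registers[x]
    return memory["area"]

if __name__ == "__main__":
    print(area_high_level(2.0))
    print(area_low_level(2.0))
```

Both functions give the same answer. The difference is how much the programmer has to write out by hand, and how easy the result is to read.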

Why so many languages? Languages are generally tailored to different tasks. One might be science-oriented, such as FORTRAN, and another database-oriented, such as SQL. Apple's OS X and iPhone OS are programmed in what had generally been a somewhat obscure language called Objective-C.

